Approximately 402.74 million terabytes of data are created every day around the world. Such an enormous volume has become both an opportunity and a challenge for businesses trying to derive meaningful insights from their information assets.

Traditional data integration methods simply cannot keep pace with this explosion of data. The global AI market is projected to reach US$1.01 trillion by 2031, growing at a CAGR of 26.60%, driven in part by AI’s ability to address these data management challenges. With AI-driven data integration, businesses can automate ETL processes and perform data ingestion, transformation, and mapping in real time.

What makes this approach especially valuable is AI’s ability to process large volumes of data quickly and accurately, which is crucial in industries such as finance, healthcare, and e-commerce, where real-time insights drive competitive advantage. AI models can also adapt and learn from new data, continuously improving your data integration systems with far less manual effort.

In this how-to article, you will learn how AI is changing data integration and find practical guidance for deploying modern data integration platforms within your organisation. Whether you are evaluating AI-powered data integration platforms or planning a broader enterprise strategy for intelligent data workflows, this article will help you understand your options and make informed implementation decisions.

The growing requirement for smarter data integration

Modern businesses are drowning in data. While the average company today uses more than 400 different data sources for analytics, many organisations need access to over 1,000 sources to meet their actual requirements. This flood of information calls for smarter and more efficient ways to integrate data.

The rise of big data and real-time demands

Data is being generated from every type of source at an unprecedented rate; estimates of daily global data creation range from 328.77 million to 402.74 million terabytes, depending on the estimate. At the same time, customer expectations have fundamentally changed: we have moved from a world where weekly reports were acceptable to one where instant insights are the norm.

“Think about how we used to sit down to watch the nightly news once a day. Now we have access to news around the clock. We expect to know when things happen in real time,” notes Mindy Ferguson, Vice President of Messaging and Streaming at AWS.

Different use cases require different processing speeds:

- Fraud detection: <1 second latency

- Patient monitoring: <1 second latency

- Customer experience applications: <10 seconds latency

- Business intelligence: <15 minutes latency
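These targets can be captured as configuration that pipeline monitoring checks against. The minimal Python sketch below assumes hypothetical use-case keys and a hypothetical `meets_target` helper; the thresholds simply restate the list above, treating each "<" bound as strict.

```python
# Maximum acceptable end-to-end latency per use case, in seconds.
# Keys are illustrative names (hypothetical); values restate the list above.
LATENCY_TARGETS_S = {
    "fraud_detection": 1,
    "patient_monitoring": 1,
    "customer_experience": 10,
    "business_intelligence": 15 * 60,
}


def meets_target(use_case: str, observed_latency_s: float) -> bool:
    """Return True if an observed pipeline latency is inside the target."""
    target = LATENCY_TARGETS_S[use_case]
    # The targets use strict "<" bounds, so hitting the limit exactly is a miss.
    return observed_latency_s < target


print(meets_target("fraud_detection", 0.4))            # True
print(meets_target("business_intelligence", 20 * 60))  # False
```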

Why traditional methods come up short

Traditional approaches to data integration struggle to scale with modern demands. Forcing all data into a single, predefined structure is time-consuming and leaves little flexibility for analysis. Unstructured data, such as documents, images, and free text, is often left out entirely because it does not fit neatly into tabular formats.

Meanwhile, with data spread across on-premises and cloud environments, physically consolidating it is often difficult because of security requirements. As a result, information remains fragmented across departments, often with inconsistent definitions and naming conventions.

The human cost is enormous: data scientists are estimated to spend about 80% of their time cleaning and preparing data instead of generating insights. Much of this effort is avoidable, because it stems from isolated systems, poor data quality, and slow processing.

The cost of poor data integration

The financial effects of poor data integration are severe. Gartner estimates that poor data quality costs organisations an average of $12.9 million annually. An IDC survey further reported that companies with 1,000 employees lose nearly $6 million each year because workers spend 36% of their day searching for information—and half the time, they cannot find what they need.

Beyond the immediate costs, experts warn of “integration debt”: the compounding inefficiencies and risks that build up over years of neglected or poorly executed integration. It shows up as duplicate data entry, reconciliation discrepancies, and missed business opportunities.

NASA learned this lesson at a tragic cost. The investigation into the 2003 Space Shuttle Columbia disaster reported that poor data management contributed to the accident, noting that NASA had “a wealth of data tucked away in multiple databases without a convenient way to integrate and use the data for management, engineering, or safety decisions.”

As 2025 approaches, the stakes are rising. With 83% of enterprises adjusting their data management priorities because of AI initiatives, smarter data integration has become a necessity rather than an option.

How AI is changing the data integration landscape

Artificial intelligence is becoming central to modern data integration strategies, addressing problems that traditional methods handle poorly. Where conventional approaches struggle with disparate systems and massive data volumes, AI improves these processes through automation, real-time capabilities, and the removal of barriers between teams and systems.

Automating data mapping and cleansing

Data mapping and cleansing have historically been among the most time-consuming tasks in data integration, and they account for much of the preparation work that takes up an estimated 80% of data scientists’ time. AI changes this by automating tasks that previously required significant manual intervention.

AI-powered systems can now automatically discover relationships between data fields, identify patterns, and suggest suitable transformations. Machine learning algorithms analyse data structures to map fields between source and destination systems, and they improve over time by learning from user feedback and past mappings.
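Production platforms typically combine trained models with metadata for this, but the core idea of scoring candidate source-to-target pairs and proposing the best match can be sketched with field names alone. The example below uses Python's standard `difflib.SequenceMatcher` as a simple stand-in for a learned matcher; the field names and the 0.5 acceptance threshold are illustrative assumptions.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Score two field names from 0.0 to 1.0 by shared character runs."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def suggest_mappings(source_fields, target_fields, threshold=0.5):
    """Propose a target field for each source field.

    Each source field is paired with its most similar target field.
    Scores below `threshold` are left unmapped (None) so that a person
    reviews them instead of accepting a weak guess.
    """
    suggestions = {}
    for src in source_fields:
        best = max(target_fields, key=lambda tgt: similarity(src, tgt))
        suggestions[src] = best if similarity(src, best) >= threshold else None
    return suggestions


# Hypothetical CRM and warehouse field names.
crm_fields = ["cust_name", "email_addr", "phone", "xyz"]
warehouse_fields = ["customer_name", "email", "phone_number"]
print(suggest_mappings(crm_fields, warehouse_fields))
# {'cust_name': 'customer_name', 'email_addr': 'email', 'phone': 'phone_number', 'xyz': None}
```

A learned matcher would also weigh data types, value distributions and past user corrections; name similarity alone is only the starting point.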

AI also excels at pattern recognition, making it highly effective at identifying and correcting common data quality issues. Modern AI applications automatically detect:

- Duplicate records and inconsistencies

- Missing values and outliers

- Formatting differences and standardisation needs

- Type mismatches and anomalies
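The rule-based sketch below shows the kinds of checks involved. AI-driven tools learn or infer such rules from the data; here they are hard-coded for clarity. It assumes records are Python dictionaries with hypothetical `email` and `amount` fields, and it flags outliers with the modified z-score (median and median absolute deviation, using the commonly cited 3.5 cut-off), which is less distorted by extreme values than a mean-based z-score.

```python
import re
from statistics import median

# Deliberately loose email check (something@something.tld); an assumption.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def robust_outliers(values, threshold=3.5):
    """Return positions of outliers using the modified z-score (median/MAD)."""
    if not values:
        return []
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to compare against
    return [i for i, v in enumerate(values)
            if abs(0.6745 * (v - med) / mad) > threshold]


def profile_records(records):
    """Flag duplicate, incomplete, malformed, mistyped and outlying rows by index."""
    issues = {"duplicates": [], "missing": [], "bad_format": [],
              "type_mismatch": [], "outliers": []}
    seen = set()
    numeric_rows, numeric_values = [], []
    for i, row in enumerate(records):
        key = tuple(sorted(row.items()))  # fingerprint for exact duplicates
        if key in seen:
            issues["duplicates"].append(i)
        seen.add(key)
        if any(v is None for v in row.values()):
            issues["missing"].append(i)
        email = row.get("email")
        if email is not None and not (isinstance(email, str) and EMAIL_PATTERN.match(email)):
            issues["bad_format"].append(i)
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and not isinstance(amount, bool):
            numeric_rows.append(i)
            numeric_values.append(amount)
        elif amount is not None:
            issues["type_mismatch"].append(i)
    # Outlier positions index numeric_values; map them back to row indices.
    issues["outliers"] = [numeric_rows[j] for j in robust_outliers(numeric_values)]
    return issues


rows = [
    {"id": 1, "email": "a@example.com", "amount": 100},
    {"id": 2, "email": "b@example.com", "amount": 102},
    {"id": 2, "email": "b@example.com", "amount": 102},   # exact duplicate
    {"id": 3, "email": None, "amount": 98},               # missing email
    {"id": 4, "email": "not-an-email", "amount": 101},    # bad format
    {"id": 5, "email": "e@example.com", "amount": 5000},  # outlier
    {"id": 6, "email": "f@example.com", "amount": "12"},  # string, not a number
]
print(profile_records(rows))
```

Flagging by row index keeps detection separate from correction, so a person or a downstream rule can decide whether to drop, merge or repair each row.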

Once configured and validated, these systems can perform routine cleansing tasks faster and more consistently than manual review.

Real-time data ingestion and transformation

Many of today’s business needs can no longer be met through traditional batch processing alone. An estimated 80% of companies still rely on stale or outdated data for critical decisions. AI enables real-time integration by continuously ingesting and processing data as it is generated.

This provides up-to-date insights and supports timely decisions. For example, fraud detection models using real-time data can identify anomalies in milliseconds, while batch-oriented models may flag them only after the fraudulent activity has already taken place. Similarly, retail algorithms can adjust prices dynamically based on live market demand.
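To make the contrast concrete, the sketch below scores each transaction the moment it arrives instead of waiting for a nightly batch. It is a deliberately simple statistical detector (a running z-score maintained with Welford's online algorithm), not a trained fraud model; the class name, the 3-standard-deviation cut-off and the five-event warm-up are illustrative assumptions.

```python
import math


class StreamingAnomalyDetector:
    """Flag values far from the running mean, updating statistics per event.

    Welford's online algorithm keeps the mean and variance in O(1) memory,
    so each event is scored as it arrives.
    """

    def __init__(self, k: float = 3.0, min_samples: int = 5):
        self.k = k                      # standard deviations that count as anomalous
        self.min_samples = min_samples  # warm-up before anything is flagged
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def _update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    def observe(self, x: float) -> bool:
        """Return True if x looks anomalous given the events seen so far."""
        if self.count >= self.min_samples:
            std = math.sqrt(self.m2 / (self.count - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) > self.k * std:
                # Flagged values are kept out of the baseline so one burst of
                # fraud does not inflate the variance and mask the next one.
                return True
        self._update(x)
        return False


detector = StreamingAnomalyDetector()
amounts = [100, 105, 95, 102, 98, 5000, 101]  # hypothetical transaction values
print([detector.observe(a) for a in amounts])
# [False, False, False, False, False, True, False]
```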

AI platforms excel at handling streaming data by:

- Predicting incoming loads from historical patterns

- Auto-scaling to optimise resource utilisation

- Routing data intelligently based on content and priority

- Triggering downstream processes based on predefined rules
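Content-based routing and rule-based triggering, the last two items above, can be illustrated without any machine learning at all. In the hypothetical `Router` below the routing predicates are hand-written; in an AI-assisted platform, a model might supply the priority or category that those predicates inspect. Queue names and event fields are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Predicate = Callable[[dict], bool]


@dataclass
class Router:
    """Send each event to one queue and fire any matching triggers."""
    routes: List[Tuple[Predicate, str]] = field(default_factory=list)
    triggers: List[Tuple[Predicate, Callable[[dict], None]]] = field(default_factory=list)
    queues: Dict[str, List[dict]] = field(default_factory=dict)

    def add_route(self, predicate: Predicate, queue: str) -> None:
        self.routes.append((predicate, queue))  # checked in insertion order

    def add_trigger(self, predicate: Predicate, action: Callable[[dict], None]) -> None:
        self.triggers.append((predicate, action))

    def dispatch(self, event: dict) -> str:
        # First matching route wins; unmatched events go to "default".
        queue = next((q for pred, q in self.routes if pred(event)), "default")
        self.queues.setdefault(queue, []).append(event)
        # Triggers are independent of routing: every matching rule fires.
        for pred, action in self.triggers:
            if pred(event):
                action(event)
        return queue


alerts = []
router = Router()
router.add_route(lambda e: e.get("priority") == "high", "realtime")
router.add_route(lambda e: e.get("type") == "clickstream", "analytics")
router.add_trigger(lambda e: e.get("type") == "payment" and e.get("amount", 0) > 10_000,
                   alerts.append)

print(router.dispatch({"type": "payment", "amount": 25_000, "priority": "high"}))  # realtime
print(router.dispatch({"type": "clickstream", "page": "/home"}))                   # analytics
print(router.dispatch({"type": "log"}))                                             # default
print(len(alerts))                                                                  # 1
```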

AI’s role in breaking down data silos

Data silos are among the most significant obstacles to effective AI initiatives. They make it harder for teams to work from shared data, slow down transformation programmes, and reduce the value organisations get from digital innovation.

One way AI addresses this challenge is through a data fabric: an intelligent, interconnected layer built on top of source systems. A data fabric allows data to be connected, transformed, and delivered securely without necessarily moving it.
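The sketch below illustrates the "without moving it" part of that idea: a hypothetical `DataFabric` class stores only a fetch function per source and assembles a combined view at query time. Real data fabric products add metadata catalogues, security, caching and AI-driven discovery; the in-memory dictionaries here merely stand in for a CRM, a billing system and a support desk.

```python
from typing import Callable, Dict, Optional

Fetch = Callable[[str], Optional[dict]]


class DataFabric:
    """A toy virtual integration layer (hypothetical, for illustration).

    Sources stay where they are; the fabric keeps only how to reach them
    and combines their answers when a view is requested.
    """

    def __init__(self) -> None:
        self._sources: Dict[str, Fetch] = {}

    def register(self, name: str, fetch: Fetch) -> None:
        self._sources[name] = fetch

    def customer_view(self, customer_id: str) -> dict:
        """Query every source on demand and merge the answers by source name."""
        view = {"customer_id": customer_id}
        for name, fetch in self._sources.items():
            record = fetch(customer_id)
            if record is not None:  # sources with no data are simply omitted
                view[name] = record
        return view


crm = {"c1": {"name": "Ada", "segment": "enterprise"}}
billing = {"c1": {"balance": 120.0}}
support = {}  # no tickets yet for c1

fabric = DataFabric()
fabric.register("crm", crm.get)
fabric.register("billing", billing.get)
fabric.register("support", support.get)
print(fabric.customer_view("c1"))
# {'customer_id': 'c1', 'crm': {'name': 'Ada', 'segment': 'enterprise'}, 'billing': {'balance': 120.0}}
```

Because the sources are queried at request time, an update in the billing system appears in the next view without any copy step; the trade-off is that every view depends on the sources being available.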

With integrated data, AI models can learn from complete customer journeys rather than fragmented snapshots. This unified foundation accelerates experimentation, simplifies deployment, and allows organisations to scale AI initiatives rapidly.

Once data is unified, governed, and accessible through AI-powered integration, organisations can make faster and more accurate decisions.

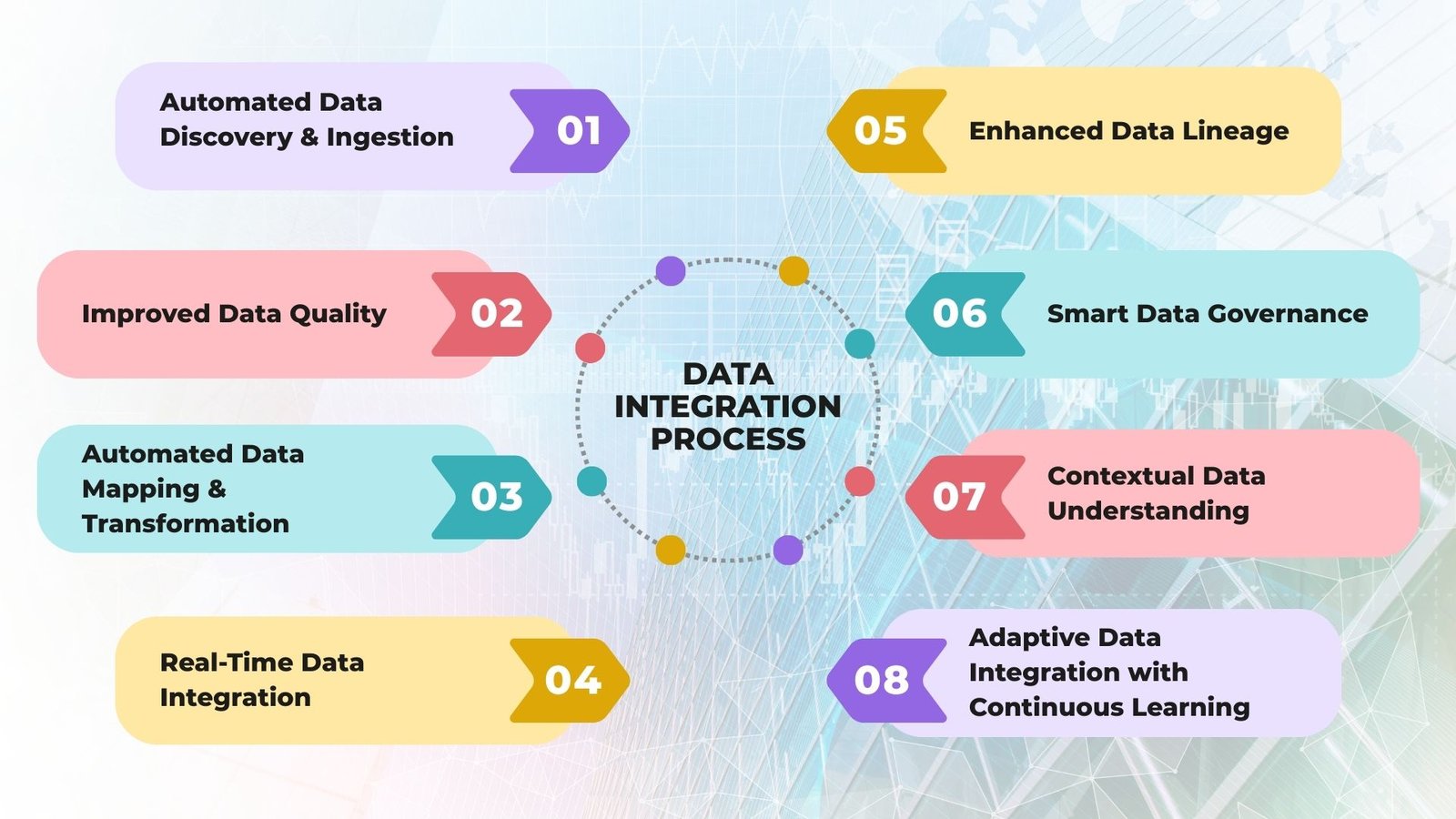

Key benefits of AI-powered data integration

AI-driven data integration delivers benefits well beyond simple automation. Organisations using these technologies report significant improvements in four areas.

Improved data quality and accuracy

Poor data quality silently drains resources; Gartner puts the average cost to organisations at $12.9 million per year. AI data integration addresses this directly by identifying and correcting inconsistencies in real time.

These systems effectively resolve issues such as:

- Duplicate records

- Missing values

- Inconsistent formats

- Anomalies in data

This results in reduced operational expenses, fewer compliance issues, and improved customer service outcomes.

Faster decision-making with real-time insights

Traditional batch processing forces decision-makers to work with outdated information. AI-enabled real-time integration shortens the gap between an event and the insight it produces, in some cases to milliseconds.

The impact is especially significant in time-sensitive scenarios such as fraud detection, inventory management, and personalised customer experiences.

Scalability and adaptability for growing businesses

As businesses expand, their data ecosystems grow more complex. Traditional systems struggle to scale with increasing volume, whereas AI solutions can adapt without constant re-engineering.

With cloud-based pay-as-you-go models and AI-driven auto-scaling, organisations can avoid paying for idle resources while maintaining performance as data loads increase.
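Auto-scaling usually pairs a load forecast with a sizing rule. The sketch below uses a simple moving average as the forecast (a stand-in for the learned models real platforms may use) and sizes a worker pool with a safety margin. The class and function names, the 20% headroom and the worker limits are illustrative assumptions, not settings from any specific cloud service.

```python
import math
from collections import deque


class LoadForecaster:
    """Forecast next-interval load as the average of the last `window` intervals."""

    def __init__(self, window: int = 3) -> None:
        self.history = deque(maxlen=window)  # oldest values drop out automatically

    def record(self, events_per_second: float) -> None:
        self.history.append(events_per_second)

    def forecast(self) -> float:
        return sum(self.history) / len(self.history) if self.history else 0.0


def workers_needed(forecast_eps: float, capacity_per_worker: float,
                   headroom: float = 0.2, min_workers: int = 1,
                   max_workers: int = 50) -> int:
    """Size the pool for the forecast plus headroom, clamped to [min, max]."""
    target = forecast_eps * (1 + headroom) / capacity_per_worker
    return max(min_workers, min(max_workers, math.ceil(target)))


forecaster = LoadForecaster(window=3)
for eps in (800, 1000, 1200):  # events per second in the last three intervals
    forecaster.record(eps)
print(workers_needed(forecaster.forecast(), capacity_per_worker=250))  # 5
```

The clamp to a minimum and maximum pool size reflects the cost side of the trade-off: scaling to zero saves money but adds cold-start latency, and an upper bound caps spend during unexpected spikes.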

Improved compliance and better data governance

As the regulatory landscape keeps expanding, AI can support governance by automatically enforcing data policies and standards. AI systems can continuously monitor usage patterns to flag deviations from organisational and regulatory guidelines.

AI can also track data flows, apply compliance rules, and support adherence to regulations such as GDPR and HIPAA. Research shows that 63% of organisations using AI have already established governance policies, and 79% incorporate AI-related risks into their enterprise risk management programmes.
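The sketch below illustrates two of those mechanisms: policy-driven masking of personal data and a simple lineage log recording which data flowed to whom. It is not a GDPR or HIPAA implementation. The `POLICY` structure, role names and field names are hypothetical, and in an AI-assisted platform a classifier might tag personal-data fields instead of relying on a hand-written list.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical policy; in practice this would come from a governance catalogue.
POLICY = {
    "pii_fields": {"email", "phone"},
    "unmasked_roles": {"privacy_officer"},
}

LINEAGE_LOG = []  # append-only record of which data went where


def mask(value: str) -> str:
    """Replace a value with a short, stable pseudonym (same input, same output).

    An unsalted hash is used only for illustration; production systems
    would use keyed hashing or tokenisation.
    """
    return "masked:" + hashlib.sha256(value.encode("utf-8")).hexdigest()[:8]


def release(record: dict, source: str, consumer: str, role: str) -> dict:
    """Return a policy-compliant copy of `record` and log the data flow."""
    may_see_pii = role in POLICY["unmasked_roles"]
    masked_fields = [] if may_see_pii else sorted(
        k for k in record if k in POLICY["pii_fields"] and record[k] is not None)
    out = {k: mask(str(v)) if k in masked_fields else v for k, v in record.items()}
    LINEAGE_LOG.append({
        "source": source,
        "consumer": consumer,
        "role": role,
        "masked": masked_fields,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return out


customer = {"id": 42, "email": "ada@example.com", "country": "GB"}
print(release(customer, source="crm", consumer="marketing_dashboard", role="analyst"))
print(release(customer, source="crm", consumer="audit_export", role="privacy_officer"))
print(len(LINEAGE_LOG))  # 2
```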

Common challenges and how AI solves them

Businesses face several significant challenges when integrating data across their organisations. Fortunately, AI provides solutions that address these obstacles directly.

Manual processes and slow ETL pipelines

Traditional ETL requires extensive coding and specialised engineers, creating bottlenecks and knowledge gaps. Engineers spend time writing scripts, mapping fields, and resolving workflow issues.

AI transforms this environment by automating:

- Data mapping across systems

- Anomaly detection during pipeline execution

- Optimisation of transformations based on performance metrics

Data inconsistencies and errors

Poor data quality undermines business performance, and issues such as missing values, duplicate records, and format inconsistencies require significant engineering effort to fix.

AI improves data quality by automatically detecting and correcting these issues. In financial operations, AI systems have been reported to achieve accuracy rates as high as 99.99% for processes such as invoice matching and procurement.

Disconnected systems and fragmented data

The average enterprise maintains 897 applications, and 95% of organisations report integration challenges. These disconnected systems create data silos that limit visibility into operations and customers.

AI helps resolve this fragmentation by detecting schema differences between systems, enforcing consistent definitions, and automating normalisation into standard formats with minimal manual coding.
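Below is a minimal sketch of the two steps named here, detecting schema differences and normalising records into a standard form, assuming schemas are plain `{field: type_name}` dictionaries. The rename map is supplied by hand; an AI-assisted tool would propose it, for example by matching field names and sample values. All function, field and type names are hypothetical.

```python
# Map the type names used in the schemas to Python conversion functions.
CASTS = {"int": int, "float": float, "str": str}


def diff_schemas(expected: dict, observed: dict) -> dict:
    """Compare {field: type_name} schemas: added, removed and retyped fields."""
    shared = expected.keys() & observed.keys()
    return {
        "added": sorted(observed.keys() - expected.keys()),
        "removed": sorted(expected.keys() - observed.keys()),
        "type_changed": sorted(f for f in shared if expected[f] != observed[f]),
    }


def normalise(record: dict, renames: dict, target: dict) -> dict:
    """Rename fields into the target vocabulary and cast values to target types.

    Fields with no counterpart in the target schema are dropped.
    """
    out = {}
    for key, value in record.items():
        name = renames.get(key, key)
        if name in target:
            out[name] = None if value is None else CASTS[target[name]](value)
    return out


TARGET = {"customer_id": "int", "email": "str", "balance": "float"}

# A source whose schema has drifted from the target.
print(diff_schemas(TARGET, {"customer_id": "str", "email": "str", "balance_usd": "float"}))
# {'added': ['balance_usd'], 'removed': ['balance'], 'type_changed': ['customer_id']}

billing_row = {"CustID": "42", "Email": "ada@example.com", "Balance": "120.50", "LegacyFlag": "Y"}
renames = {"CustID": "customer_id", "Email": "email", "Balance": "balance"}
print(normalise(billing_row, renames, TARGET))
# {'customer_id': 42, 'email': 'ada@example.com', 'balance': 120.5}
```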

High operational costs and resource demands

Traditional integration requires high spending on infrastructure, software, and human resources. AI and automation solutions are reported to reduce operational costs by 20–30% and increase productivity by more than 40%.

Some organisations report that, with AI-driven ETL, 2–3 team members now manage a workload that previously required 50 engineers. Deloitte reports that robotic process automation reduced management reporting time from several days to one hour.

Getting started with AI data integration

Successful AI integration requires careful planning and a structured approach. The right tools and best practices significantly improve outcomes.

Choosing the right AI data integration tools

When evaluating tools, assess their ability to connect to enterprise data sources—both cloud and on-premises—for batch and real-time needs. Ensure the platform includes metadata management, strong governance, scalability, robust security, and manageable total cost of ownership.
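One way to apply these criteria consistently across vendors is a weighted scoring matrix. The weights and the 1-to-5 scores below are placeholders that show the arithmetic, not recommendations; the criterion keys are hypothetical shorthand for the criteria above.

```python
# Hypothetical weights for the criteria above; they sum to 1.0.
WEIGHTS = {
    "connectivity": 0.25,  # cloud and on-premises sources, batch and real time
    "metadata": 0.10,
    "governance": 0.15,
    "scalability": 0.15,
    "security": 0.20,
    "total_cost": 0.15,    # score higher for a lower total cost of ownership
}


def weighted_score(scores: dict) -> float:
    """Combine 1-5 criterion scores into one weighted score."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return round(sum(WEIGHTS[c] * scores[c] for c in WEIGHTS), 2)


platform_a = {"connectivity": 5, "metadata": 3, "governance": 4,
              "scalability": 4, "security": 5, "total_cost": 2}
platform_b = {"connectivity": 4, "metadata": 4, "governance": 3,
              "scalability": 5, "security": 4, "total_cost": 4}
print(weighted_score(platform_a), weighted_score(platform_b))  # 4.05 4.0
```

Raising an error for a missing criterion, rather than treating it as zero, prevents a vendor from being silently penalised for an incomplete evaluation.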

Understanding Azure AI data integration features

Foundry Tools from Microsoft’s Azure AI offer prebuilt capabilities for rapid deployment. These include Vision, Speech, Language, and Document Intelligence services, which can be built into integration workflows (for example, extracting fields from scanned documents before loading them into a data store) and are billed on a flexible, consumption-based model.

Best practices for implementation

To ensure successful AI data integration:

- Define clear business objectives before selecting technologies

- Assess data quality and accessibility early

- Start with small pilot projects

- Establish continuous monitoring and feedback mechanisms

When to consider custom AI solutions

Custom solutions make sense when your organisation’s requirements are highly specialised and cannot be met by prebuilt tools. Consider custom development for:

- Specialised data processing

- Real-time analytics on complex datasets

- Competitive differentiation through proprietary insights

Conclusion

AI data integration represents a fundamental shift in how organisations manage information assets. The enormous volume of data being generated requires smarter methods than traditional approaches can provide. Companies adopting AI-powered integration benefit from automated mapping, real-time processing, and fewer data silos.

The advantages extend beyond efficiency. Organisations see improvements in data quality, decision speed, scalability, and compliance. AI also helps address long-standing issues such as manual ETL workloads, fragmented systems, and high operational costs.

Implementing AI data integration requires a structured approach: assessing business needs, selecting appropriate tools, and determining whether prebuilt platforms such as Azure AI or custom-built solutions are more suitable. While the journey requires thoughtful planning, the return on investment can be substantial once data becomes unified, accessible, and actionable.

In a world where data is growing exponentially, AI data integration is no longer optional—it is a business imperative. Organisations that fail to modernise risk falling behind competitors that leverage real-time insights for faster, more accurate decisions. AI does not merely integrate your data; it transforms your entire approach to information management.

FAQs

What are the main benefits of AI-powered data integration?

AI-powered data integration improves data accuracy, speeds up decision-making, and enables real-time insights. It automates data mapping, cleansing, and transformation, reducing manual effort and operational errors.

What are Azure AI data integration features?

Azure AI data integration features are Microsoft Azure capabilities that enable automated data ingestion, transformation, AI-driven analytics, and real-time insights using services such as Azure Data Factory, Azure Synapse Analytics, and Azure Machine Learning.

What problems does AI solve in data integration?

AI solves common data integration challenges such as inconsistent data formats, poor data quality, delayed processing, and scalability limitations. It continuously learns from patterns to improve accuracy and efficiency over time.

How can businesses get started with AI data integration?

Businesses can start by identifying key data sources, defining clear business objectives, and assessing current data quality. Running small pilot projects before scaling enterprise-wide helps reduce risk and improve adoption.

When should a company choose custom AI solutions?

A company should choose custom AI data integration solutions when it has complex data environments, industry-specific compliance needs, or advanced analytics requirements. Custom solutions provide greater flexibility, scalability, and control than off-the-shelf tools.